Openapi Empowers APIs with MCP

Model Context Protocol: A comprehensive guide on how it works and how OpenAPI is using it to make APIs even more accessible.

Model Context Protocol (MCP) is the new hype in the tech world and beyond. Everyone is talking about it: it is showing up in GitHub repositories and YouTube videos, and it has become a key topic at many industry events. But what exactly is MCP, and why might it be a real game-changer for artificial intelligence?

What is the Model Context Protocol (MCP)?

The Model Context Protocol is an open-source, open standard protocol launched by Anthropic in November 2024. Anthropic is a leading AI company and a direct competitor of OpenAI, known for its Claude LLMs.

However, MCP only gained widespread attention a few months later, in early 2025, amid growing interest in AI agents and following public statements by high-profile tech CEOs such as Sam Altman and Sundar Pichai about adopting the protocol.

MCP was created to solve a very practical problem: improving the quality and relevance of responses generated by large language models (LLMs). These models often lack the context they need, so they can produce incorrect or irrelevant answers, including fabricated ones (known as hallucinations).

The protocol defines a universal standard for how applications can provide context to LLMs, enabling seamless communication between models, AI-based applications, data ecosystems, business tools, and development environments.

For this reason, MCP is often described as a "USB-C port for AI".

How does MCP work?

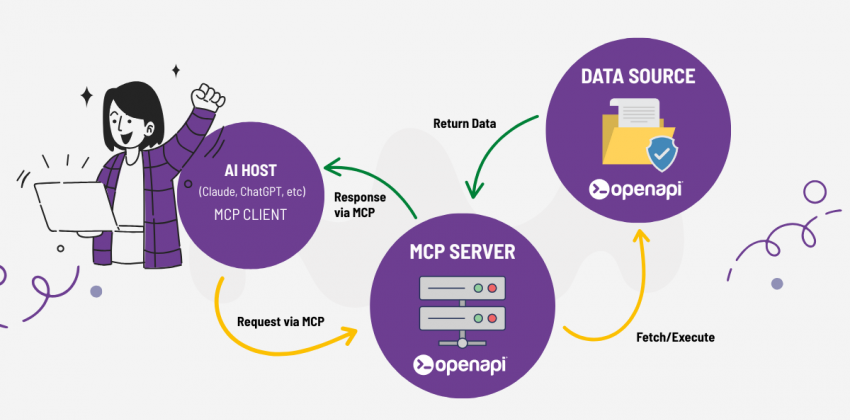

As mentioned, MCP is a standard that allows developers to build connections between data sources and AI tools. Developers can either expose data through MCP servers or build AI applications (MCP clients) that connect to those servers.

MCP uses a simple and modular client-server architecture:

- LLM: The large language model that interprets user inputs and any additional context.
- MCP Host: The AI application, such as a chat app or an IDE, through which the user works with the LLM and which contains the MCP clients.
- MCP Client: A component inside the host that maintains a 1:1 connection with a single MCP server and passes the context it retrieves to the LLM.
- MCP Server: A service that exposes data, tools, or functions through MCP.

The MCP server can securely access files, databases, or online services (like GitHub or external APIs) and provide this information in a standardized format to the MCP client, which in turn passes it to the LLM to enrich the context and improve the response.
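
The following minimal, self-contained sketch makes this flow more concrete by showing the messages a client and a server exchange. MCP messages use JSON-RPC 2.0, and `initialize`, `tools/list`, and `tools/call` are method names defined by the MCP specification. The `ToyMcpServer` class, the `read_file` tool, and the in-memory message passing are hypothetical simplifications. A real session runs over a transport such as stdio or HTTP and also sends a `notifications/initialized` message after the handshake, which this sketch omits.

```python
import json
from typing import Any, Callable, Dict, Optional, Tuple

ToolHandler = Callable[[dict], str]


class ToyMcpServer:
    """In-memory stand-in for an MCP server (hypothetical, for illustration)."""

    def __init__(self) -> None:
        # Tool registry: name -> (description, JSON Schema for inputs, handler)
        self.tools: Dict[str, Tuple[str, dict, ToolHandler]] = {}

    def tool(self, name: str, description: str, schema: dict):
        """Decorator that registers a function as a tool."""
        def register(fn: ToolHandler) -> ToolHandler:
            self.tools[name] = (description, schema, fn)
            return fn
        return register

    def handle(self, raw: str) -> str:
        """Dispatch one JSON-RPC 2.0 request and return the JSON-RPC response."""
        req = json.loads(raw)
        method, params = req["method"], req.get("params", {})
        result: Any
        if method == "initialize":
            result = {
                "protocolVersion": params.get("protocolVersion"),
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "toy-server", "version": "0.1"},
            }
        elif method == "tools/list":
            result = {"tools": [
                {"name": n, "description": d, "inputSchema": s}
                for n, (d, s, _) in self.tools.items()
            ]}
        elif method == "tools/call":
            _, _, fn = self.tools[params["name"]]
            text = fn(params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        else:
            error = {"code": -32601, "message": "Method not found"}
            return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


def request(req_id: int, method: str, params: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request as an MCP client would send it."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": req_id, "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


server = ToyMcpServer()


@server.tool("read_file", "Return the contents of a project file.", {
    "type": "object",
    "properties": {"path": {"type": "string"}},
    "required": ["path"],
})
def read_file(args: dict) -> str:
    fake_files = {"README.md": "Demo project readme"}  # stands in for real file access
    return fake_files.get(args["path"], "file not found")


# Client side: handshake, tool discovery, then a tool call.
init = json.loads(server.handle(request(1, "initialize", {
    "protocolVersion": "2024-11-05",
    "capabilities": {},
    "clientInfo": {"name": "toy-client", "version": "0.1"},
})))
tools = json.loads(server.handle(request(2, "tools/list")))
call = json.loads(server.handle(request(3, "tools/call", {
    "name": "read_file", "arguments": {"path": "README.md"},
})))
print(call["result"]["content"][0]["text"])  # Demo project readme
```

In a real host, the `tools/list` result would be handed to the LLM. The `tools/call` request would be sent only after the model chooses that tool.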

Why MCP Matters for Developers

Before MCP, every AI model or app had to integrate each individual data source or tool manually, leading to a complex M×N integration challenge (where M is the number of apps and N is the number of data sources). MCP radically simplifies this scenario into an M+N problem, making development much more efficient.
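
The difference is easy to quantify. This short sketch counts the integrations needed in each case. It assumes, for simplicity, that each app implements MCP client support once and each data source exposes one MCP server. The sizes in the loop are illustrative only.

```python
def integrations_without_mcp(apps: int, sources: int) -> int:
    """M x N: every app needs a custom connector for every data source."""
    return apps * sources


def integrations_with_mcp(apps: int, sources: int) -> int:
    """M + N: each app implements MCP once, each data source implements it once."""
    return apps + sources


# Illustrative sizes only, not measured data.
for m, n in [(3, 4), (10, 20), (50, 100)]:
    print(f"M={m}, N={n}: {integrations_without_mcp(m, n)} custom connectors "
          f"vs {integrations_with_mcp(m, n)} MCP implementations")
```

With 10 apps and 20 data sources, for example, that means 200 custom connectors versus 30 MCP implementations.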

AI developers no longer need to build custom interfaces for each model or data source—they can create agents or workflows at a higher abstraction level. They can switch between AI models or tools with ease, without rewriting code or depending on a specific provider.

MCP vs API: What’s the Difference?

MCP and REST APIs might seem similar—both allow requests to external services—but they differ substantially.

One major distinction is how requests are made and responses are handled. With REST APIs, the developer's code calls predefined endpoints with specific parameters and receives well-structured responses. Deciding which endpoint to call, and interpreting what comes back, is left entirely to that code.

MCP, on the other hand, lets the user express a goal as a natural-language prompt. The MCP server describes its tools to the LLM, giving the model the context it needs to reason about when and why to invoke them. This supports goal-oriented logic rather than fixed call sequences.
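
The contrast can be sketched in a few lines. On the wire, both approaches are structured. The difference is who decides what to call: the developer's code in the REST case, the LLM in the MCP case, guided by a tool description and input schema. The endpoint URL and the `get_weather` tool below are hypothetical. The `name`/`description`/`inputSchema` fields and the `tools/call` method follow the MCP specification.

```python
import json
from urllib.parse import urlencode


# REST: the developer's code decides which endpoint to call and with what parameters.
# The endpoint below is hypothetical.
def rest_request_url(city: str) -> str:
    return "https://api.example.com/v1/weather?" + urlencode({"city": city})


# MCP: the server advertises a tool with a natural-language description and a
# JSON Schema for its inputs. The host passes this to the LLM, which decides
# whether calling it serves the user's goal. The tool itself is hypothetical.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city. Use when the user asks "
                   "about weather conditions or what to wear.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}


def mcp_tool_call(req_id: int, city: str) -> str:
    """The structured request an MCP client sends once the LLM picks the tool."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": req_id,
        "method": "tools/call",
        "params": {"name": WEATHER_TOOL["name"], "arguments": {"city": city}},
    })


print(rest_request_url("Rome"))  # https://api.example.com/v1/weather?city=Rome
print(mcp_tool_call(7, "Rome"))
```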

Another key difference: MCP is a session-oriented standard, allowing AI agents to maintain rich, persistent interactions with backend services.

Unlike stateless REST APIs, MCP supports:

- Session memory across interactions
- Structured tool invocations for complex operations
- Streaming of responses over HTTP using Server-Sent Events (SSE)
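
MCP's HTTP-based transport can deliver JSON-RPC messages as an SSE stream. A typical case is a progress notification followed by the final result. The sketch below is a simplified, standard-library-only SSE reader for such a stream. The sample payload is made up, while `notifications/progress` and its `progressToken`/`progress`/`total` fields come from the MCP specification. Real clients would rely on an MCP SDK or a complete SSE implementation.

```python
import json
from typing import Iterator, List


def parse_sse(stream: str) -> Iterator[dict]:
    """Yield JSON-RPC messages carried in a Server-Sent Events stream (simplified)."""
    data_lines: List[str] = []
    for line in stream.splitlines():
        if line == "":
            # A blank line ends the current event; dispatch it if it carried data.
            if data_lines:
                yield json.loads("\n".join(data_lines))
                data_lines = []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        elif line.startswith("data:"):
            value = line[len("data:"):]
            # The SSE format strips a single leading space from the field value.
            data_lines.append(value[1:] if value.startswith(" ") else value)
        # Other fields ("event:", "id:", "retry:") are ignored in this sketch.
    # Per the SSE format, an event not terminated by a blank line is discarded.


sample = (
    ": keep-alive\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "method": "notifications/progress", '
    '"params": {"progressToken": "t1", "progress": 50, "total": 100}}\n'
    "\n"
    "event: message\n"
    'data: {"jsonrpc": "2.0", "id": 3, "result": {"content": '
    '[{"type": "text", "text": "done"}], "isError": false}}\n'
    "\n"
)

for message in parse_sse(sample):
    # Notifications carry a "method"; the final response carries a "result".
    print(message.get("method") or message["result"]["content"][0]["text"])
```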

However, APIs can still be a core part of the tools an MCP server exposes. For example, once the LLM decides which tool to use, that tool may call an API and pass the response back to the LLM.
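
Here is a minimal sketch of such a tool: a handler that wraps a REST endpoint and returns its response in the shape of an MCP `tools/call` result. The base URL, the `resource` argument, and the helper names are hypothetical. The fetcher is injectable, so the example runs without network access. Reporting API failures through `isError` lets the LLM see the failure as part of its context instead of the whole call crashing.

```python
import json
import urllib.request
from typing import Callable

Fetch = Callable[[str], dict]


def http_get_json(url: str) -> dict:
    """Default fetcher: a real HTTP GET that parses the JSON body."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def make_api_tool(base_url: str, fetch: Fetch = http_get_json) -> Callable[[dict], dict]:
    """Wrap a REST endpoint as an MCP tool handler that returns a tools/call result."""

    def handler(arguments: dict) -> dict:
        url = f"{base_url}/{arguments['resource']}"
        try:
            data = fetch(url)
        except Exception as exc:
            # Report API failures as a tool error the LLM can see, instead of crashing.
            return {"content": [{"type": "text", "text": f"API error: {exc}"}], "isError": True}
        # Serialize the API response as text so it can be added to the LLM's context.
        return {"content": [{"type": "text", "text": json.dumps(data)}], "isError": False}

    return handler


def fake_fetch(url: str) -> dict:
    """Stub fetcher so the example runs without network access."""
    return {"url": url, "status": "ok"}


tool = make_api_tool("https://api.example.com", fetch=fake_fetch)
result = tool({"resource": "orders/42"})
print(result["content"][0]["text"])
# {"url": "https://api.example.com/orders/42", "status": "ok"}
```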

MCP Use Cases: From GitHub to Salesforce, Postman, and Canva

The adoption of MCP opens up incredible opportunities—even outside strictly technical fields.

Developers can now use AI agents to review and write code, seamlessly accessing environments like GitHub to detect errors, debug, and automate repetitive tasks.

But MCP's potential goes well beyond software development. It can also be used in content creation, customer support, and other knowledge-intensive tasks, thanks to its ability to access technical documentation and product information.

Several companies have already announced MCP integration into their workflows:

- Salesforce: Now enables teams to securely query CRM data using AI agents, without needing licenses or training. Developers can automate tasks like deploys, queries, and tests using smart prompts within their IDEs. IT leaders benefit from centralized governance to manage policies, permissions, and access across all MCP connectors.

- GitHub: Uses MCP to automate coding tasks, integrate external tools via context-aware actions, and enable cloud-based workflows accessible from any device, with no local setup required.

- Postman: Offers visual creation of MCP agents without server code or hosting. With a single click, you can launch an agent in seconds and start testing or integrating it with Agent Mode or other conversational agents via remote MCP support.

- Canva: Soon, thanks to MCP, users will be able to create and edit Canva projects directly from a chat. A single AI command can generate presentations, resize visuals, or import/export assets, all without ever leaving the conversation.

How Openapi Is Implementing MCP in Its Applications

We’re excited to share a major development at Openapi: Our technical team is currently building an Openapi MCP server, leveraging one of the most innovative technologies in the AI-API ecosystem.

With this new infrastructure, AI applications will be able to interact in real time with our API library, making complex requests in a natural, contextual, and fully automated way. This means, for example, that it will be possible to:

- Access over 400 API services and millions of records using a simple text prompt
- Generate, filter, and enrich information dynamically
- Integrate smart workflows with external systems without writing complex code
- Enable conversational AI agents to manage API-based tasks
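
Details of the Openapi MCP server will be shared later. What follows is only a generic sketch of one common way to expose a large API catalog through MCP, not a description of our implementation. The approach generates one MCP tool definition per API operation, so the LLM can choose operations from their descriptions. The `ApiOperation` class, the two operations, and their paths are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ApiOperation:
    """Hypothetical catalog entry for one API operation (not an actual data model)."""
    operation_id: str
    method: str
    path: str
    summary: str
    params: Dict[str, str] = field(default_factory=dict)  # name -> JSON Schema type


def to_mcp_tool(op: ApiOperation) -> dict:
    """Turn one API operation into an MCP tool definition (name, description, inputSchema)."""
    return {
        "name": op.operation_id,
        "description": f"{op.summary} ({op.method} {op.path})",
        "inputSchema": {
            "type": "object",
            "properties": {name: {"type": typ} for name, typ in op.params.items()},
            "required": list(op.params),
        },
    }


# Two made-up operations standing in for a large API catalog.
catalog: List[ApiOperation] = [
    ApiOperation("get_exchange_rate", "GET", "/exchange-rates/{currency}",
                 "Get the latest exchange rate for a currency", {"currency": "string"}),
    ApiOperation("validate_address", "POST", "/addresses/validate",
                 "Check and normalize a postal address", {"address": "string"}),
]

tools = [to_mcp_tool(op) for op in catalog]
print([t["name"] for t in tools])  # ['get_exchange_rate', 'validate_address']
```

Because each description states what the operation does, a catalog of hundreds of operations can be offered to the LLM without hand-writing a separate integration for each one.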

The Openapi MCP server will unlock entirely new ways to use our APIs, making integration with AI systems easier and accelerating development, testing, and automation.

We’ll be sharing more details soon, including release plans, technical documentation, and the first available integrations.